When a 1970s game console beats ChatGPT at chess, maybe it’s time to stop and think about our biological superpowers.

The plot twist nobody expected (but we probably should have)

Picture the scene: ChatGPT, the artificial intelligence that shook the foundations of the tech world, virtually sits down at a chessboard to face… an Atari 2600, a game console from 1977. Yes, you read that right: a machine born in the ’70s, when smartphones were science fiction and the internet was still called ARPANET.

The result? The Atari 2600 checkmated ChatGPT as if it were a beginner at their first neighborhood club tournament. But while headlines immediately started shouting “AI IS OVER!” with all the subtlety of a jackhammer, we prefer to do what we do best: dig beneath the surface and understand what this really means.

This “defeat” is anything but proof that AI is useless, whatever the recent headlines say. Instead, it gives us the perfect excuse to ask a question: what if the most versatile machine in the world, right now, were still our brain?

We’ll dig deeper into this topic from a scientific point of view later in the article. In the meantime, the story got even juicier in mid-July: after its sound defeat at the hands of the Atari 2600, ChatGPT suffered another disaster when it was challenged online by the world’s no. 1 chess player, Magnus Carlsen. The result? ChatGPT lost all its pawns and resigned. The Norwegian champion, on the other hand, didn’t lose a single piece and finished the game in just 53 moves.

AI and the brain: a (complicated) love story more than 150 years in the making

Before we rush to declare the failure of artificial intelligence, let’s take a step back. The relationship between the human mind and machines didn’t begin with ChatGPT, or even with computers. It all started in 1847, when a certain George Boole had the brilliant intuition that human thought could be translated into mathematics: true/false, 1/0, on/off.

From there, it’s been a long dance of mutual inspiration. In 1943, McCulloch and Pitts looked at the neurons in our brain and thought: “Hey, what would happen if we built artificial networks that worked like this?” And so neural networks were born — the very ones powering ChatGPT today and letting us ask AI to write poems about our cats (now that’s innovation!).

But here comes the first plot twist: despite all this biological inspiration, the human brain and modern AI work in completely different ways. Our brain consumes about 20 watts, less than an old incandescent light bulb, yet it can recognize faces, drive cars, play Go and simultaneously think about what to make for dinner. An AI system that wants to beat a master at Go, a 2,500-year-old game? It needs tens of thousands of watts. It’s like comparing an e-bike to a monster truck: both get you from A to B, but with slightly different fuel efficiency.
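To put rough numbers on that comparison, here is a back-of-the-envelope sketch in Python. The 20-watt figure comes from the text above; the 20,000 watts (the low end of “tens of thousands”) and the four-hour game length are purely illustrative assumptions, not measurements of any real system.

```python
# Back-of-the-envelope energy comparison (illustrative numbers only).
BRAIN_WATTS = 20.0           # figure quoted in the article
AI_SYSTEM_WATTS = 20_000.0   # ASSUMPTION: low end of "tens of thousands of watts"
GAME_HOURS = 4.0             # ASSUMPTION: hypothetical length of one long game


def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy in kilowatt-hours used by a constant load over a given time."""
    return power_watts * hours / 1000.0


power_ratio = AI_SYSTEM_WATTS / BRAIN_WATTS
brain_kwh = energy_kwh(BRAIN_WATTS, GAME_HOURS)
ai_kwh = energy_kwh(AI_SYSTEM_WATTS, GAME_HOURS)

print(f"Power ratio (AI / brain): {power_ratio:.0f}x")
print(f"Brain energy for one game: {brain_kwh:.2f} kWh")
print(f"AI system energy for one game: {ai_kwh:.2f} kWh")
```

Under these assumptions the gap is about three orders of magnitude. Change the constants and the ratio moves, but the e-bike versus monster truck picture stays the same.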

Does using ChatGPT make your brain lazier? MIT says yes

It’s an uncomfortable question, but a legitimate one. And the answer — at least according to MIT — is: kind of, yes.

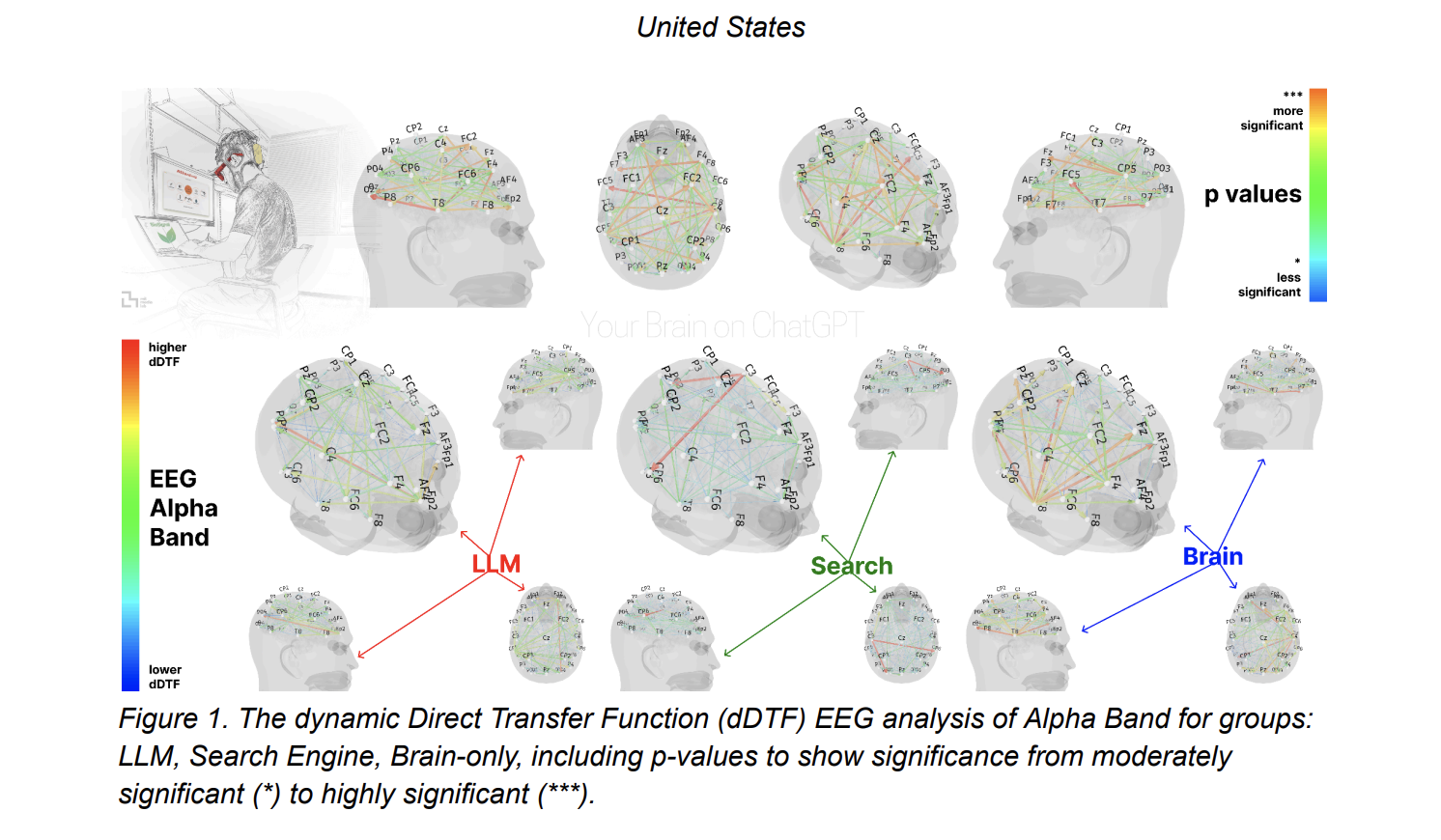

A recent study with a title that sounds like a pretty serious warning (“Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task”) tried to understand what happens to our minds when we let AI do the heavy lifting. Spoiler: the brain relaxes — maybe a little too much.

Researchers measured the brain activity of 54 students while they wrote essays, with and without AI. The result? ChatGPT made everything easier, yes, but it also “switched off” some areas of the brain linked to memory, planning and critical thinking. In practice: less effort = less activation = less engagement. And that’s not all: those who wrote with AI remembered less of what they had written and struggled more when they had to go back to writing without assistance.

This phenomenon has been called “cognitive debt”: the more you delegate, the less you train yourself to think on your own. The concept sparked debate, helped along by sensationalist headlines like “ChatGPT Is Making Us Dumber!” In reality, as the information page DataPizza also explains clearly, the study doesn’t demonize AI; it invites us to use it with awareness. The real risk isn’t using AI per se, but using it passively and uncritically, which in the long run can undermine our ability to think autonomously.

When AI makes mistakes like we do (and why that’s not necessarily bad)

Here’s where the story gets interesting. Recent studies have found that large language models make reasoning errors that are surprisingly similar to our own human cognitive biases. AI falls into the same logical traps, uses the same mental shortcuts, and makes the same statistical mistakes we do when we need to make quick decisions.

But — and this is a “but” as big as a Google data center, which, by the way, consumes a ton of energy — how it gets to those errors is completely different from ours.

AI reasons on a purely logical-mathematical basis, with no emotions, distractions, or that little inner voice telling us “maybe I should check if my washing machine is done instead of finishing this article.” That makes it incredibly effective at processing mountains of data without ever getting tired. But it also deprives it of that “tacit” understanding of the world that we acquire simply by living, breathing, and repeatedly banging our heads against everyday problems.

The human factor: creativity, intuition and that thing called “common sense”

This is the heart of the matter. A human being can make mistakes out of distraction (curse you, notifications…), but also possesses something no AI has yet replicated: the ability to have insights that seem to come out of nowhere, to think outside the box, to say “wait, something’s off here” even when all the data says otherwise.

Emotional intuition plays a crucial role in our decisions. As human beings, we instinctively understand when a situation calls for empathy, when moral values should override the numbers, when the social consequences of a decision matter more than algorithmic efficiency.

Today’s AIs can recognize the emotional tone of a text and simulate an empathetic response, but it’s like watching a robot actor playing someone in love: technically flawless, emotionally hollow. They don’t actually feel emotions, they have no consciousness, they don’t know what it’s like to wake up at 3 a.m. worrying about the future of the planet. And maybe that’s for the best.

Smart use of ChatGPT: when to delegate and when to keep your brain switched on

So, when does it make sense to use ChatGPT and when is it better to rely on our 20-watt analog brain? The answer isn’t “always” or “never,” but “it depends on what you’re doing, what you want to achieve — and how much you care about the planet.”

You can delegate to ChatGPT when:

- You need to analyze huge datasets that would drive any human crazy

- You need quick translations (even if they’re not perfect)

- You want to automate complex calculations or repetitive tasks

- You want a brainstorming partner to help expand on an idea

Keep your brain switched on when:

- You have to make decisions that involve values or ethical aspects

- You need to create something truly original, not just a remix of existing ideas

- You need a solid understanding of the human, social or emotional context

- You’re doing a task you could easily perform yourself (and why waste the extra energy?)

The (limited-time) golden age of free AI

Let’s enjoy this phase where ChatGPT is free and Google lets us use Gemini at no cost. Because it probably won’t last.

Experts agree: the current pricing structure of AI is not sustainable. Once the “gold rush” is over and the market stabilizes, higher costs are inevitable. Between stricter regulations (the European AI Act is constantly being updated), rising energy costs, and the need to generate real profits, we should be prepared to pay more for our almost-do-it-all digital assistants.

Here’s an interesting deep dive on this topic, starting from the question “What if AI is just another economic bubble waiting to burst?”. Link to the article.

The golden rules for a healthy relationship with ChatGPT

1. Get informed and experiment: don’t delegate blindly. Try things, test them, learn the limits. AI is powerful, not magical.

2. Consider the environmental impact: every complex request costs the planet energy. Always ask yourself: “Is this task really worth its weight in watts?”

3. Keep human control: for ethical, creative, or people-related decisions, the final say should always be yours.

4. Remember your superpowers: intuition, genuine creativity, emotional understanding and critical thinking are still exclusive skills of your biological brain.

The (sustainable) future of collaborative intelligence

We’re standing at a crucial crossroads. On one side, AI offers us extraordinary computational power. On the other, our brain still holds an evolutionary advantage of, oh, just a couple of million years in terms of energy efficiency, creativity, and understanding of the world.

The solution isn’t to pick a team, but to build smart collaboration. AI is incredibly useful when we use it for what it does best: calculations, analysis, automation. The human brain is irreplaceable when we use it for what it naturally excels at: intuition, ethics, genuine creativity, common sense.

ChatGPT’s defeat against the Atari 2600 is not proof that AI is pointless. It’s a reminder that specialized intelligence (human or artificial) often beats general-purpose intelligence, and that maybe our 20-watt brain still has a few aces up its sleeve that are worth playing.

After all, it was a human brain that invented both the Atari 2600, back in 1977, and ChatGPT. And for now, that record is still unbeaten.

Sources used for this article

The information reported here is based on scientific studies and industry analyses updated to 2024 and 2025, including research published in Nature, NIST reports, environmental analyses on AI, and EU and US regulatory documents.

Nataliya Kosmyna et al. (MIT Media Lab), “Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task.” Link.

Futurism, “ChatGPT ‘Absolutely Wrecked’ at Chess by Atari 2600 Console From 1977.” Link.