Have you ever scrolled through Facebook and stumbled upon a picture that made you think, “What on earth is this?” If the answer is yes, you’re not alone. And that feeling of weirdness you had was probably more than justified.

If the answer is no, you’re lucky. But we suggest you read this article anyway, so you’re ready when it does happen (because yes, unfortunately, it will).

We’re right in the middle of what experts call “enshittification” — a process through which digital platforms gradually degrade the quality of their services in the name of profit. And if you thought Facebook had already hit rock bottom, well… prepare to rethink that.

The latest chapter of this decline is called AI Slop, and in recent months it has been flooding our feeds with increasingly sophisticated fake content, scams, and manipulation.

But what happens when artificial intelligence becomes the perfect accomplice for online scams?

And why does Meta seem to be doing so little to stop it?

What is enshittification, and why should we care?

The term **“enshittification”** was coined by Canadian blogger and digital activist Cory Doctorow to describe a precise, recurring pattern: (1) platforms start by offering an excellent service to users; (2) they gradually worsen the experience to benefit advertisers; (3) finally, they squeeze the advertisers themselves to maximize corporate profit.

The term has become so relevant that the Macquarie Dictionary — Australia’s main dictionary — selected it as the 2024 Word of the Year, acknowledging a phenomenon many of us had already sensed, even without having a word for it.

Facebook is perhaps the clearest case study of enshittification. Remember when it mainly showed you posts from your friends and family? Those days are long gone. According to a Harvard study, from Q2 2021 to Q3 2023 the share of Feed content coming from “unconnected posts” (i.e., from pages and profiles users don’t follow) rose from 8% to 24%. On paper, that might seem like a good thing: discovering content aligned with our interests is, after all, supposed to be the point.

But there’s a problem: Facebook’s latest algorithm updates, designed to compete with TikTok and push content deemed “engaging,” have opened the door to something far more… suspicious.

AI Slop: the new frontier of garbage content

**“AI Slop”** refers to the **flood of low-quality, AI-generated content taking over social media**, Facebook in particular. These are images, text, and videos created with AI tools: often obviously **fake**, completely **nonsensical**, yet strangely effective at capturing attention. It’s a close relative of “brain rot” content (itself an **Oxford 2024 Word of the Year**), which we covered in a previous article.

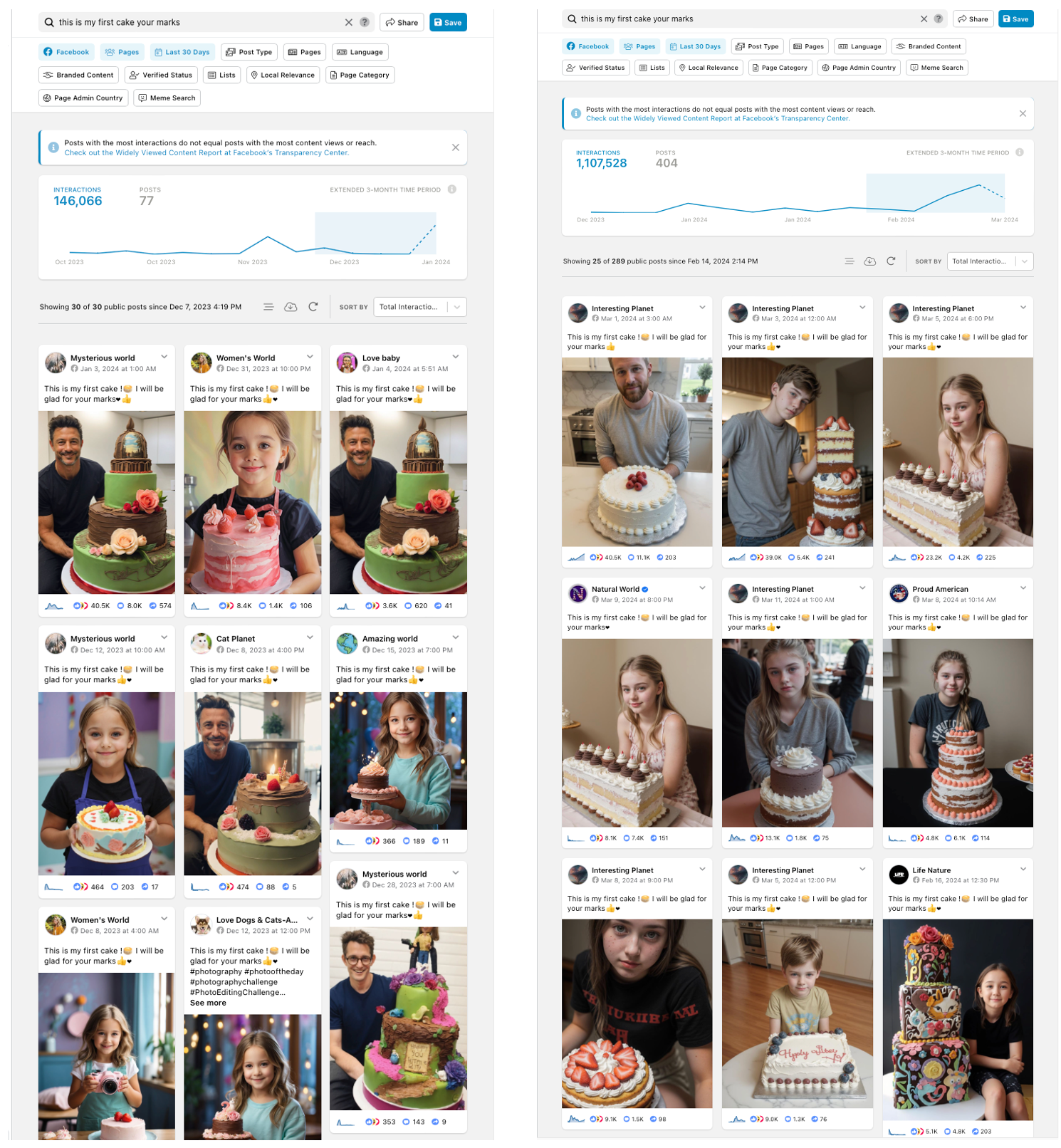

AI Slop is now so widespread that you’ve probably come across it without even realizing it. Have you ever seen, for example:

- Elderly people or children with birthday cakes and captions like “This is my first cake! I will be glad for your marks.”

- People showing furniture, paintings or sculptures with the caption “Made it with my own hands”

A simple post from the “Leandro Cañete” Facebook account. Over 16,000 reactions, nearly 1,700 comments, and 500 shares.

- Bizarre and borderline blasphemous images of Jesus made of shrimp or crabs (yes, really — the infamous Shrimp Jesus / Crab Jesus)

Posted by the Facebook group “Love God & God Love You.” A Jesus worshipped by crabs… 213,000 reactions.

- Animals “covered in dirt” being “cleaned” by anonymous Good Samaritans who, 4K camera always in hand, somehow have endless time to rescue these creatures.

These are classic examples of AI Slop: endlessly recycled content using the same phrases and formats. And yet, it works. So why not… try it ourselves?

How to recognize AI scams on Facebook

Telling an authentic post apart from an AI-generated one is getting harder, but there are still some telltale signs:

Anatomical mistakes

AI images often contain subtle glitches: strange elbows, odd foot positions, distorted hands. While today’s tools are far better than the six-fingered monsters of just a year ago, errors persist.

Blurry or undefined faces

Subjects often look smudged or strangely melted into their surroundings.

Repetitive, generic captions

If you see the same emotional phrase duplicated across dozens of pages, it’s likely automated. Scammers rely on formulas that drive engagement.

Suspicious follower spikes

Many pages see their follower counts explode after a rebrand. One page went from 9,400 followers as a music band to over 300,000 in a week (a more than 30-fold jump) after renaming itself “Life Nature.”

The scam business model: why doesn’t Meta intervene?

This is where things get alarming. According to the Harvard study, 120 Facebook pages frequently posting AI-generated images received **hundreds of millions of engagements and impressions**. In Q3 **2023**, **one of Facebook’s 20 most-viewed posts was an AI-generated image** with 40 million views — posted by the account “Cafehailee,” which **did not label the content as AI-generated**.

The image, now removed, was posted by “Cafehailee.” As of October 2025, the account still exists and has 350,000 followers.

The problem? This system generates massive profits.

Investigations suggest that a significant portion of Facebook’s ad revenue may come from these very scams, which thrive thanks to AI Slop.

Spam creators can earn thousands of dollars per month posting AI content on Facebook by taking advantage of Facebook’s “performance bonuses” for public content (available until 31 August 2025). Unsurprisingly, YouTube is full of tutorials on how to monetize this kind of content, a trend Cory Doctorow analyzed at length in a recent talk.

https://www.youtube.com/watch?v=_Ai-fC-2Bpo

Meta’s clumsy attempts to contain the phenomenon

In April 2025, **Meta finally acknowledged the problem**, publishing a statement titled “Cracking Down on Spammy Content on Facebook.” The company announced measures such as limiting the reach of spammy accounts, removing fake networks, and protecting original creators.

But what does this mean in practice? Meta promised to reduce the visibility of accounts that post “long, distracting captions with excessive hashtags” or captions unrelated to the content. The company also says that in 2024 it removed more than 100 million fake pages used to artificially inflate reach.

Impressive, right?

Maybe. But the problem is far from solved. Many journalists have noticed that once they began investigating the topic — thereby training Facebook’s algorithm — their feeds were suddenly flooded with AI Slop, suggesting that the platform is still actively promoting this content.

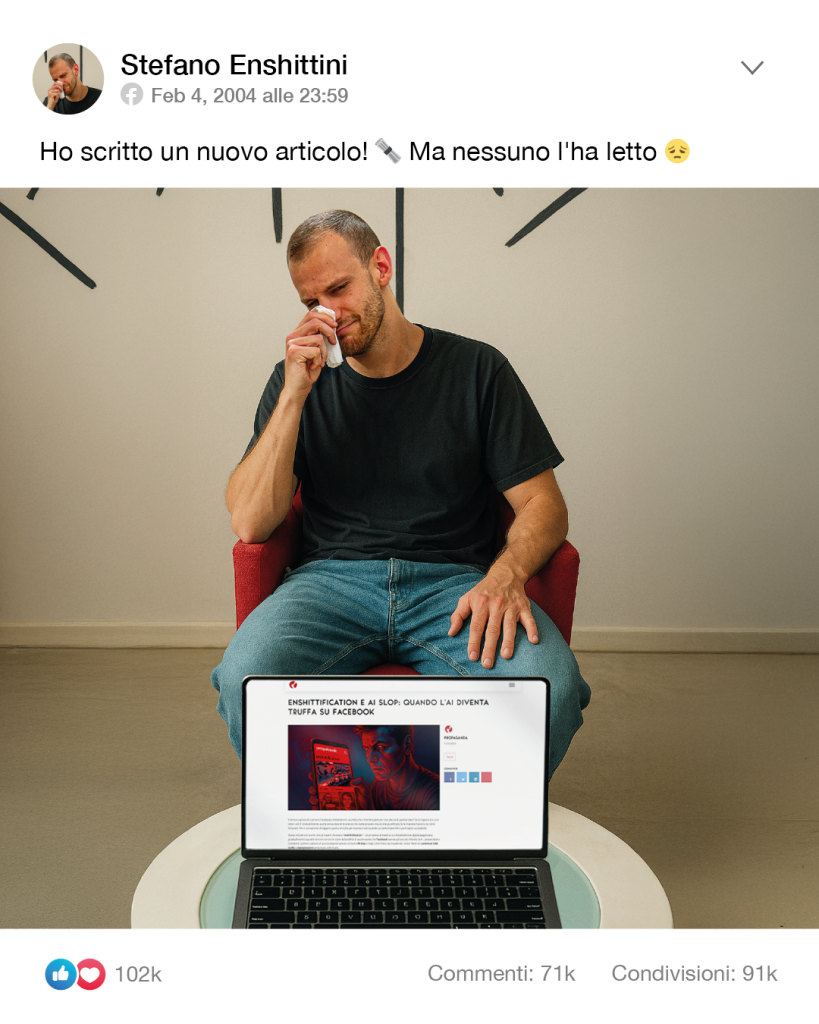

The engagement paradox: when fake outperforms real

Here’s the scary part: **many users do not realize the images are AI-generated**, reacting and commenting as if they were genuine. Praise for kids who don’t exist, sympathy for elderly people who were never born, enthusiasm for cakes that were never baked…

Some savvier users have begun posting warnings and sharing infographics explaining how to spot AI content. But for now, they’re a minority.

This creates a disturbing paradox: fake content generates more engagement than real content, feeding a vicious cycle where the algorithm keeps rewarding it.

And AI Slop isn’t just a Facebook problem.

It’s spreading across LinkedIn, Instagram, TikTok: everywhere. Facebook just happens to be the most fertile ground, likely because its user base is older and less digitally literate.

How to protect yourself

In this scenario, **information becomes our strongest weapon**. Once again, the solution is a more **critical and conscious use of social platforms**.

A few practical tips:

- Always verify the source of what you share

- Beware of images that look too “perfect” or hyper-realistic

- Be skeptical of pages with overnight follower explosions

- Report obviously fake or scammy content

- Share these insights with friends and family — especially those less digitally savvy

A reflection on the future of digital communication

As we write this article, it’s clear we’re standing at a crucial crossroads for the future of digital communication. Enshittification is not just a technical process — it’s a symptom of the direction social media has taken in recent years.

AI Slop may be the most worrying evolution yet: not only are our data extracted and sold, but now our attention is being hijacked by entirely artificial content, created solely to generate profit.

The question isn’t whether we can stop this — it may already be too late — but whether we can develop the cultural antibodies needed to navigate a world where the line between real and fake grows increasingly blurry.

Because ultimately — as with most technological shifts — the real issue isn’t AI itself, but how we choose to use it.

And as long as we value engagement more than truth, we will keep feeding the digital parasite eroding our critical and cognitive abilities.

If this article was helpful, share it with friends and family: information remains our best defense against the enshittification of the web. And remember: in a world full of AI slop, every piece of genuine human content becomes an act of resistance.